Cache strategies

The most common cache strategies that everyone should know

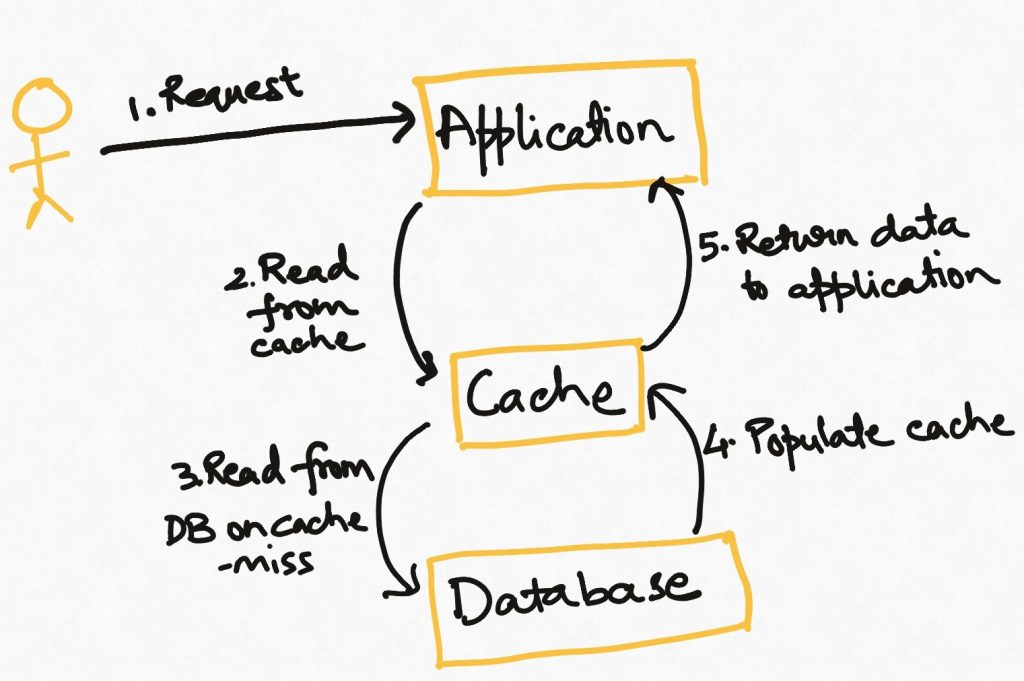

1. Read through

This is the simplest and most commonly used strategy. The application tries to read the data from the cache. If it finds the data (known as a “cache hit”), all is well. If it doesn’t find the data in the cache (known as a “cache miss”), it goes to the source to fetch it and loads it into the cache. If the cache is full, an eviction policy suited to the nature of the data (e.g. least recently used, least frequently used) identifies the data that should be removed from the cache to make room for the incoming data.

In this strategy, tasks that encounter a cache miss will have a higher latency than those that get a cache hit.
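The flow above can be sketched in a few lines of Python. This is only an illustration, not a production cache: the class name `ReadThroughCache`, the `load_from_source` callback, and the choice of least-recently-used eviction are all assumptions made for the example.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Illustrative read-through cache with least-recently-used eviction."""

    def __init__(self, load_from_source, capacity=2):
        self._load = load_from_source   # hypothetical callback that reads the source
        self._capacity = capacity
        self._data = OrderedDict()      # oldest entry first, newest last
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._data:
            # Cache hit: mark the entry as recently used and return it.
            self.hits += 1
            self._data.move_to_end(key)
            return self._data[key]
        # Cache miss: go to the source, which is the slower path.
        self.misses += 1
        value = self._load(key)
        if len(self._data) >= self._capacity:
            # Cache is full: evict the least recently used entry.
            self._data.popitem(last=False)
        self._data[key] = value
        return value


source = {"a": 1, "b": 2, "c": 3}   # stand-in for a database
cache = ReadThroughCache(source.__getitem__, capacity=2)
cache.get("a")   # miss: loaded from the source
cache.get("a")   # hit: served from the cache
```

The `hits` and `misses` counters are there only to make the two paths visible; a real cache would more likely export them as metrics.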

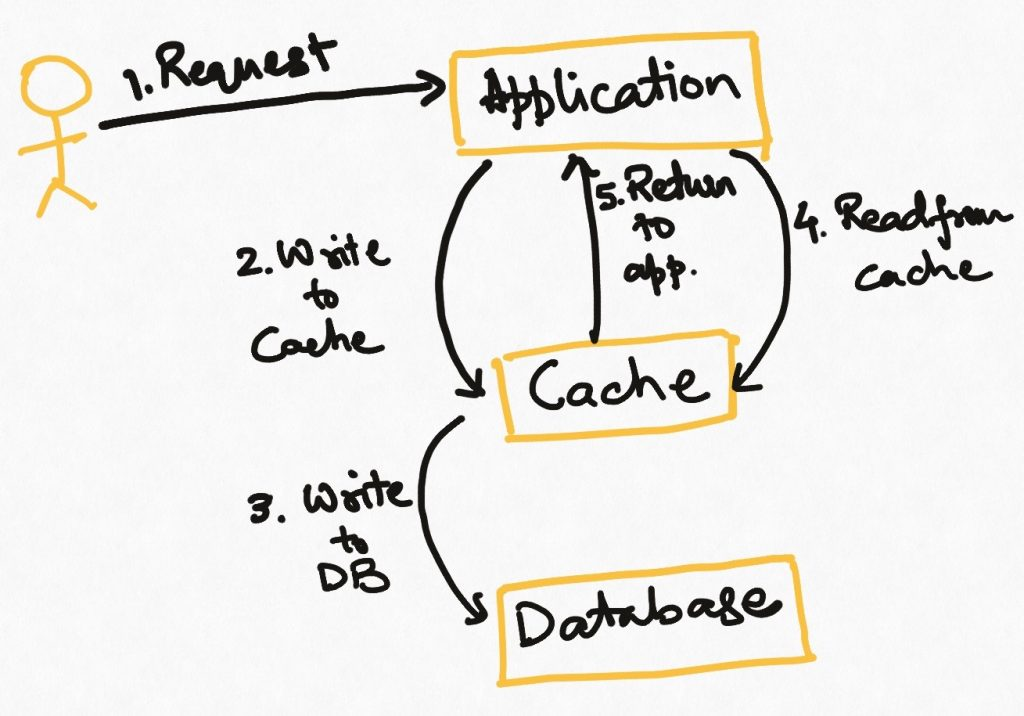

2. Write through

In applications where the cache can hold all the data and is expected to always be fresh, we can use the write-through pattern. In this pattern, every write is done first to the cache and then to the source. This means that the cache is always in sync with the source. The cache becomes the source of truth for the application, and the application never reads the data from the source.

On the flip side, this requires the full data to be loaded in the cache at the outset. It also introduces higher latencies on the write operations, and higher write load on the cache system, which may, in turn, impact its read performance.
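A minimal sketch of this pattern, assuming a dict-like object stands in for the source; the class and method names are made up for the example:

```python
class WriteThroughCache:
    """Illustrative write-through cache in front of a dict-like source."""

    def __init__(self, source):
        self._source = source
        # The full data set is loaded into the cache at the outset.
        self._cache = dict(source)

    def put(self, key, value):
        # Every write goes to the cache first, then to the source,
        # so the caller pays the latency of both writes.
        self._cache[key] = value
        self._source[key] = value

    def get(self, key):
        # Reads are served from the cache only, never from the source.
        return self._cache[key]


database = {"user:1": "Ada"}          # stand-in for the real source
cache = WriteThroughCache(database)
cache.put("user:2", "Grace")
```

This sketch does not handle a failed write to the source. A real implementation would need to decide whether to roll back the cache entry or retry in that case.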

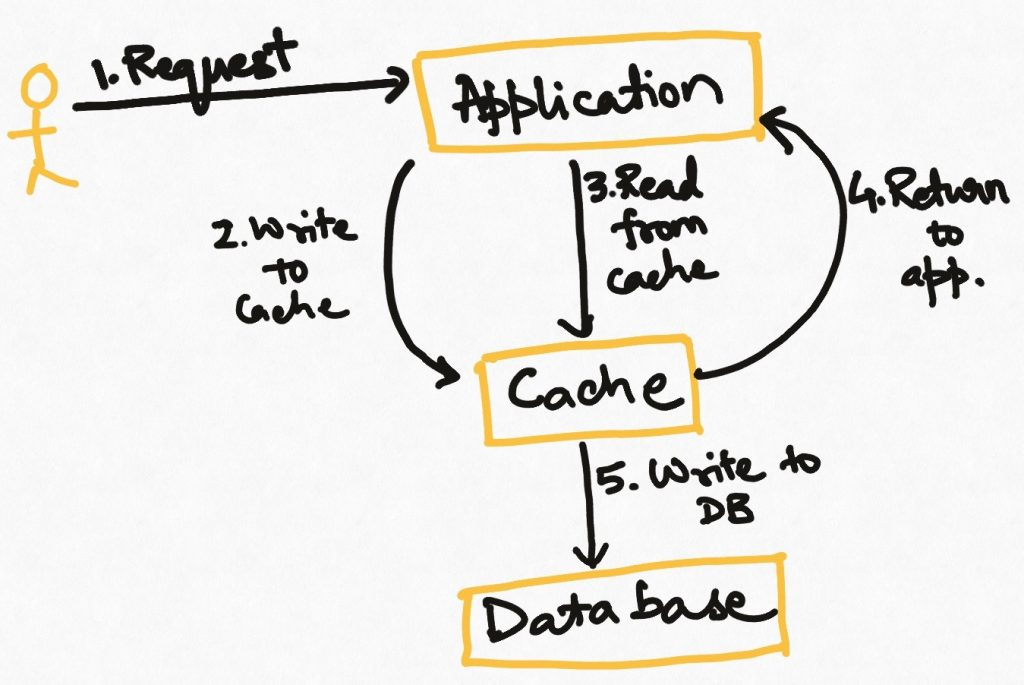

3. Write Behind

Similar to the write-through strategy, the application first writes new data to the cache. But after that, the application process returns to its main duties. The cache itself or some other process runs periodically and batch-writes the cached data into the source.

This is an effective strategy for cases where we do not want to bear the latency cost of writing to the source in the main application process, and the cache is reliable enough that we are sure of not losing the data before it is pushed to the source. This strategy requires either that writes to the source never fail while dumping data from the cache, or that there is a mechanism to resolve the resulting inconsistencies.
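As a rough sketch, the cache can track which keys are "dirty" (written but not yet pushed) and flush them in a batch. The names below are hypothetical, the source is again a dict-like stand-in, and `flush()` is assumed to be called periodically by a timer or background worker that is not shown.

```python
class WriteBehindCache:
    """Illustrative write-behind cache with a manual batch flush."""

    def __init__(self, source):
        self._source = source
        self._cache = {}
        self._dirty = set()   # keys written to the cache but not yet to the source

    def put(self, key, value):
        # Only the cache is touched here, so the caller returns quickly.
        self._cache[key] = value
        self._dirty.add(key)

    def get(self, key):
        return self._cache[key]

    def flush(self):
        """Batch-write dirty entries to the source; return how many succeeded."""
        written = 0
        for key in list(self._dirty):
            try:
                self._source[key] = self._cache[key]
            except Exception:
                # Simplest possible resolution mechanism: keep the key
                # dirty so that the next flush retries it.
                continue
            self._dirty.discard(key)
            written += 1
        return written


database = {}                      # stand-in for the real source
cache = WriteBehindCache(database)
cache.put("k1", "v1")
cache.put("k2", "v2")
# Until flush() runs, the source has not seen these writes.
```

Retrying failed keys on the next flush is just one way to handle write failures. Which approach fits depends on how long the source can be allowed to lag behind the cache.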

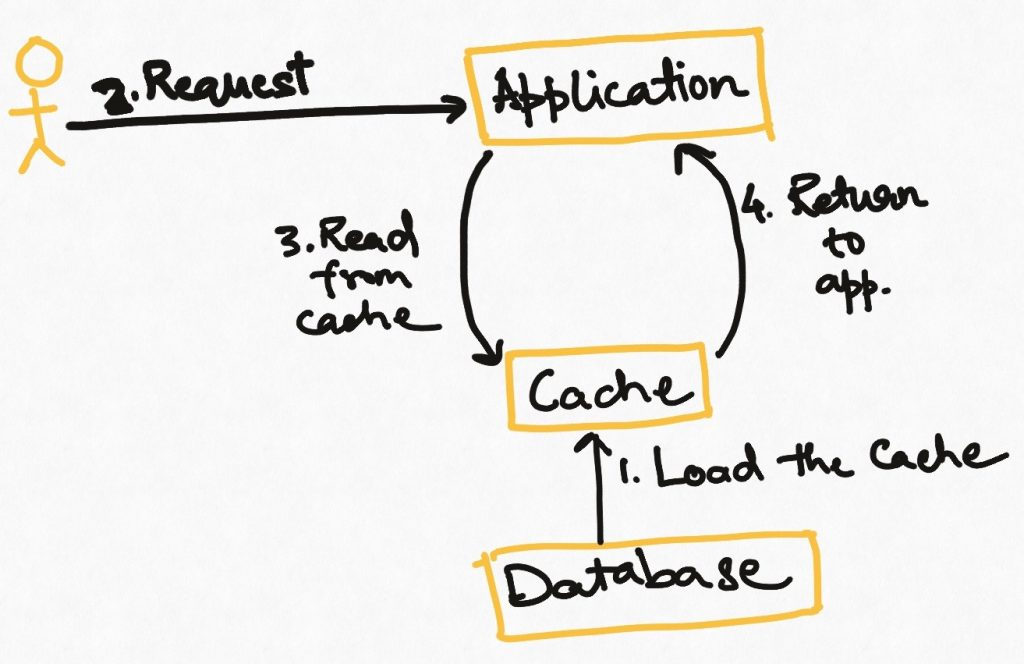

4. Refresh Ahead

In this strategy, we pre-emptively refresh all or part of the data in a cache as it approaches its expiry time. How to decide what to reload is up to the application. Note that the application may still face cache misses if not all data can be reloaded. This technique is therefore usually combined with read-through caching, with the idea that the reloading process should make cache misses rarer.

This is not a very common technique because it requires setting up a process to identify expiring data and reload it based on some smart logic. Most applications do not need it.
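To make the idea concrete, here is a small sketch that reloads entries whose expiry falls within a refresh window and falls back to a read-through load on a miss. Everything in it is an assumption for illustration: the class name, the `ttl` and `refresh_window` parameters, and the injectable `clock`. The "smart logic" is reduced to "reload everything that is about to expire".

```python
import time


class RefreshAheadCache:
    """Illustrative refresh-ahead cache with a read-through fallback."""

    def __init__(self, load_from_source, ttl=60.0, refresh_window=10.0,
                 clock=time.monotonic):
        self._load = load_from_source   # hypothetical callback that reads the source
        self._ttl = ttl                 # seconds an entry stays valid
        self._window = refresh_window   # reload entries this close to expiry
        self._clock = clock
        self._entries = {}              # key -> (value, expires_at)

    def _reload(self, key):
        value = self._load(key)
        self._entries[key] = (value, self._clock() + self._ttl)
        return value

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None or entry[1] <= self._clock():
            # Missing or expired: fall back to a read-through load.
            return self._reload(key)
        return entry[0]

    def refresh_expiring(self):
        """Reload every entry that expires within the refresh window."""
        now = self._clock()
        expiring = [key for key, (_, expires_at) in self._entries.items()
                    if expires_at - now <= self._window]
        for key in expiring:
            self._reload(key)
        return expiring


cache = RefreshAheadCache(str.upper, ttl=60.0, refresh_window=10.0)
cache.get("a")   # first access is a miss and loads from the source
```

In practice `refresh_expiring()` would be driven by a scheduler or background thread. Passing the clock in as a parameter simply makes the expiry behaviour easy to exercise without waiting.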

Happy coding!